Is correcting exposure misclassification bias an additional option in meta-analyses?

Key message

Systematic reviews and meta-analyses synthesize the results of existing studies. They are conducted according to a predefined, systematic protocol to minimize error. It is particularly important to understand the basic analytical methods, such as the fixed-effect and random-effects models, and to apply appropriate statistical techniques to assess interstudy heterogeneity. Whenever possible, the study should be designed to eliminate potential sources of bias step by step, starting from the earliest stages of the research.

In recent years, study designs such as longitudinal rather than cross-sectional, prospective rather than retrospective, and multicenter rather than single-center studies, as well as randomized controlled clinical trials (RCTs), have been recognized as better able to ensure the validity and objectivity of results. However, the effects of environmental exposures such as tobacco smoke on offspring cannot ethically be studied in RCTs, and in prospective observational cohort studies, various confounders arise during the observation period [1]. When studies comparing the efficacy of different treatments yield conflicting results, additional methods are needed to determine which treatment to select. In such cases, a meta-analysis can help minimize bias and inform guidelines or policies based on objective and valid results.

Meta-analyses require a systematic review of many studies; to reduce errors, they are conducted according to the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement [2] or the MOOSE (Meta-analysis Of Observational Studies in Epidemiology) guidelines [1]. Ayubi et al. [2] documented this process using a 27-item checklist and, to make their analysis reproducible, presented in an appendix the statistical method they used to correct for misclassification bias.

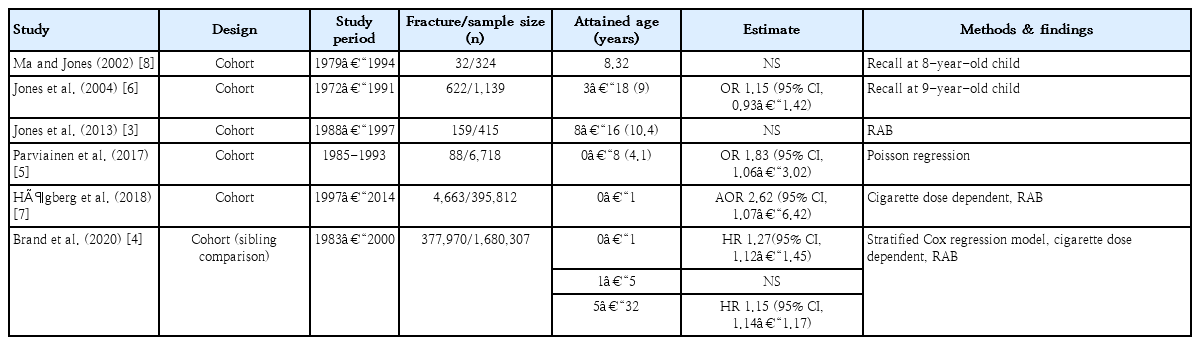

Many studies have examined maternal and child factors that affect fractures in childhood. Among these factors, maternal smoking during pregnancy poses various health hazards to the offspring, such as preterm birth, intrauterine growth retardation, low birth weight [3,4], low bone mineral content [3,5], and delayed union or bone healing [6], making it a major public health concern. However, meaningful studies on the association between maternal smoking and childhood fractures are limited. Several recent studies [4,5,7] have obtained more precise results by analyzing the populations, interventions, comparators, and outcomes in greater detail with extended analytical tools (Table 1) [3-8].

Two analytical models are used in meta-analyses: the fixed-effect model, which considers only the within-study (sampling) variance of individual studies, and the random-effects model, which considers both within- and between-study variance. The fixed-effect model is used when the research designs or methods are similar, whereas the random-effects model assumes interstudy heterogeneity. Forest plots, Cochran's Q test, Higgins' I² statistic, and meta-regression are also used to assess heterogeneity.
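
To make the difference between the two models concrete, the following is a minimal Python sketch (not the method of any study cited here) that pools hypothetical study-level log odds ratios with inverse-variance weights, computes Cochran's Q and Higgins' I², and estimates the between-study variance τ² with the DerSimonian-Laird method, which is one common random-effects estimator among several. The function name `pool_log_odds_ratios` and all input values are illustrative assumptions.

```python
import math


def pool_log_odds_ratios(log_or, se):
    """Inverse-variance pooling of study-level log odds ratios (illustrative sketch).

    Returns fixed-effect and DerSimonian-Laird random-effects estimates with
    their standard errors, Cochran's Q, Higgins' I^2 (in %), and tau^2.
    """
    k = len(log_or)
    w = [1.0 / s**2 for s in se]  # fixed-effect weights: within-study variance only
    fixed = sum(wi * yi for wi, yi in zip(w, log_or)) / sum(w)

    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, log_or))
    df = k - 1
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0

    # DerSimonian-Laird estimate of the between-study variance tau^2
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each study's within-study variance
    w_re = [1.0 / (s**2 + tau2) for s in se]
    random_est = sum(wi * yi for wi, yi in zip(w_re, log_or)) / sum(w_re)

    return {
        "fixed": fixed,
        "se_fixed": math.sqrt(1.0 / sum(w)),
        "random": random_est,
        "se_random": math.sqrt(1.0 / sum(w_re)),
        "q": q,
        "i2": i2,
        "tau2": tau2,
    }
```

With the hypothetical inputs `[0.1, 0.5, 0.9]` and equal standard errors of 0.1, Q is 32, I² is 93.75%, and τ² is 0.15. Both models give the same point estimate (0.5) because the studies are equally weighted, but the random-effects standard error is wider because it also reflects between-study variance.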

Retrospective or cross-sectional studies of the effects of environmental exposure may misclassify exposure to specific substances or introduce bias by relying on recall or proxy interviews about exposure. Stratified and Bayesian analyses can be used to correct for this error.
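
The arithmetic behind correcting exposure misclassification can be illustrated with a simple deterministic bias analysis, a simpler technique than the stratified or Bayesian analyses mentioned above. Given an assumed sensitivity (Se) and specificity (Sp) of self-reported exposure, the observed number of exposed subjects satisfies observed = Se × true + (1 − Sp) × (N − true), which can be solved for the true count. The sketch below assumes nondifferential misclassification (the same Se and Sp in cases and controls); the function names and all counts are hypothetical.

```python
def correct_exposed(observed_exposed, total, se, sp):
    """Back-calculate the true number exposed from a misclassified count.

    Solves observed = se * true + (1 - sp) * (total - true) for `true`.
    """
    denom = se + sp - 1.0
    if denom <= 0:
        raise ValueError("se + sp must exceed 1 for a valid correction")
    true_exposed = (observed_exposed - (1.0 - sp) * total) / denom
    if not 0 < true_exposed < total:
        raise ValueError("se/sp are incompatible with the observed counts")
    return true_exposed


def corrected_odds_ratio(a, b, c, d, se, sp):
    """Corrected OR for a case-control 2x2 table (hypothetical helper).

    a, b: exposed/unexposed cases; c, d: exposed/unexposed controls.
    Assumes nondifferential misclassification: the same se/sp in both groups.
    """
    n_cases, n_controls = a + b, c + d
    a_true = correct_exposed(a, n_cases, se, sp)
    c_true = correct_exposed(c, n_controls, se, sp)
    b_true, d_true = n_cases - a_true, n_controls - c_true
    return (a_true * d_true) / (b_true * c_true)
```

For example, with 60 of 200 cases and 40 of 200 controls reporting exposure, Se = 0.8, and Sp = 0.95, the observed OR of about 1.71 is corrected to 2.0, consistent with the tendency of nondifferential misclassification of a binary exposure to bias the OR toward the null.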

Bayesian analysis is a method of statistical inference that updates beliefs about unknown quantities by combining prior information, such as past results, with observed data. It differs from classical sampling theory as follows: the parameter of interest is treated as a random variable; a prior distribution and a sampling model are assumed; a posterior distribution is derived; and relevant past experience is combined with the sample data. Because it incorporates past data, Bayesian analysis can require fewer data points than classical sampling theory. It is also widely applied in ecology and sociology, where the parameter of interest is treated as an uncertain random variable.
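
The prior-to-posterior updating described above can be shown with the simplest conjugate case: a Beta prior combined with binomial data yields a Beta posterior. The sketch below treats the sensitivity of self-reported smoking as the parameter of interest; the prior Beta(8, 2) and the validation counts (45 of 50 true smokers reporting smoking) are hypothetical, and the `Beta` class is an illustrative helper, not a library API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Beta:
    """Beta(alpha, beta) distribution for a probability such as sensitivity."""

    alpha: float
    beta: float

    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    def update(self, successes: int, trials: int) -> "Beta":
        """Conjugate update: Beta prior x binomial likelihood -> Beta posterior."""
        if not 0 <= successes <= trials:
            raise ValueError("need 0 <= successes <= trials")
        return Beta(self.alpha + successes, self.beta + trials - successes)


prior = Beta(8, 2)                         # hypothetical prior, mean 0.80
posterior = prior.update(successes=45, trials=50)
```

Here the prior mean of 0.80 is updated to a posterior Beta(53, 7) with mean 53/60 ≈ 0.883. With a smaller validation sample, the posterior would stay closer to the prior, which is how prior information compensates for limited data.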

Clair et al. [9] conducted a meta-analysis to determine whether smoking is a risk factor for diabetic polyneuropathy (DPN); 28 case-control/cross-sectional studies and 10 prospective cohort studies were analyzed separately by study design using a random-effects model. However, even the prospective studies showed high heterogeneity; when they were reanalyzed using stratified analysis, higher-quality results showing a stronger association between smoking and DPN were obtained. The high heterogeneity among the included studies arose because actual smoking was denied, underestimated, or not considered.

Lian et al. [10] applied external validation data to real data from a meta-analysis of the effect of smoking on DPN. Using an extended Bayesian approach, they synthesized 2 sets of studies: those of the association between a misclassified exposure and an outcome (main studies) and those of the association between the misclassified exposure and the true exposure (validation studies). Ayubi et al. [2] addressed the fact that smoking exposure was determined by maternal recall when the child was 8 or 9 years old, meaning that mothers who smoked could have been classified as nonsmokers. They obtained meaningful results by using Bayesian analysis to correct for the misclassification bias that may occur in a meta-analysis of exposure effects.
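
The idea of combining main studies with validation data can be approximated by probabilistic bias analysis: sensitivity and specificity are drawn repeatedly from Beta distributions informed by validation counts, and each draw is used to correct the main 2×2 table. This Monte Carlo procedure is a simpler relative of, not a reimplementation of, the extended Bayesian model of Lian et al. [10] or the correction of Ayubi et al. [2]; for instance, it does not update the exposure prevalence jointly with Se and Sp. The uniform Beta(1, 1) priors, the function names, and all counts are assumptions for illustration.

```python
import random
import statistics


def corrected_or(a, b, c, d, se, sp):
    """Correct a case-control 2x2 table for nondifferential exposure misclassification.

    a, b: exposed/unexposed cases; c, d: exposed/unexposed controls.
    Returns the corrected OR, or None if (se, sp) cannot be applied to the table.
    """
    denom = se + sp - 1.0
    if denom <= 0:
        return None
    n_cases, n_controls = a + b, c + d
    a_t = (a - (1.0 - sp) * n_cases) / denom
    c_t = (c - (1.0 - sp) * n_controls) / denom
    b_t, d_t = n_cases - a_t, n_controls - c_t
    if min(a_t, b_t, c_t, d_t) <= 0:
        return None  # corrected cell counts must be positive
    return (a_t * d_t) / (b_t * c_t)


def probabilistic_bias_analysis(table, se_counts, sp_counts, draws=10_000, seed=1):
    """Monte Carlo (probabilistic) bias analysis for exposure misclassification.

    table: (a, b, c, d) from a main study.
    se_counts: (true smokers who reported smoking, all true smokers) from a validation study.
    sp_counts: (true nonsmokers who reported not smoking, all true nonsmokers).
    Se and Sp are drawn from Beta(1 + x, 1 + n - x): a uniform prior updated with the counts.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(draws):
        se = rng.betavariate(1 + se_counts[0], 1 + se_counts[1] - se_counts[0])
        sp = rng.betavariate(1 + sp_counts[0], 1 + sp_counts[1] - sp_counts[0])
        value = corrected_or(*table, se, sp)
        if value is not None:
            results.append(value)
    if not results:
        raise ValueError("no valid draws; check the validation counts")
    results.sort()
    lo = results[int(0.025 * (len(results) - 1))]
    hi = results[int(0.975 * (len(results) - 1))]
    return statistics.median(results), (lo, hi), len(results)
```

A call such as `probabilistic_bias_analysis((60, 140, 40, 160), (40, 50), (95, 100))` returns the median corrected OR, an approximate 95% simulation interval, and the number of valid draws. Draws in which Se + Sp ≤ 1 or the corrected table has non-positive cells are discarded rather than truncated, which keeps every retained OR interpretable.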

Meta-analyses have the advantages of a reproducible process and of precise estimates obtained by combining all eligible studies. However, the populations, interventions, and comparators should be verified beforehand to ensure sufficient interstudy consistency, and only then should the use of a statistical meta-analysis be decided. Even when a random-effects model (which gives relatively more weight to smaller studies), a fixed-effect model (which gives more weight to larger samples), or a mixed-effects model (e.g., for comparing 3 different interventions) is used, heterogeneity can remain among otherwise meaningful studies. An I² value exceeding 50% is generally taken to indicate substantial heterogeneity, in which case sensitivity or subgroup analyses and meta-regression should be considered to reduce bias.
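
When I² exceeds 50%, a leave-one-out sensitivity analysis is one simple way to check whether a single study drives the heterogeneity. The following sketch re-pools hypothetical log odds ratios with the DerSimonian-Laird random-effects model, omitting one study at a time, and reports the pooled OR and I² for each subset. The helper names `dl_pool` and `leave_one_out` and the data are assumptions, and the 50% cut-off is a conventional threshold rather than a strict rule.

```python
import math


def dl_pool(y, se):
    """DerSimonian-Laird random-effects pooled log OR and Higgins' I^2 (%)."""
    w = [1 / s**2 for s in se]
    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    df = len(y) - 1
    i2 = 100 * max(0.0, (q - df) / q) if q > 0 else 0.0
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_re = [1 / (s**2 + tau2) for s in se]
    return sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re), i2


def leave_one_out(y, se, labels):
    """Re-pool the studies once per study, omitting that study each time.

    Returns (omitted study, pooled OR without it, I^2 without it) tuples.
    """
    rows = []
    for i, label in enumerate(labels):
        est, i2 = dl_pool(y[:i] + y[i + 1:], se[:i] + se[i + 1:])
        rows.append((label, math.exp(est), i2))
    return rows
```

With hypothetical log ORs of 0.1, 0.1, 0.1, and 1.2 (standard error 0.1 each), I² for all 4 studies is about 96.7%, but it drops to 0% when study D is omitted, pointing to D as the main source of heterogeneity and a candidate for closer scrutiny of its design or exposure assessment.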

Therefore, verification should be performed rigorously at each stage to reduce all possible bias. Careful planning is particularly important for maintaining consistency, because it reduces misclassification bias at the research design and registration stages.

Notes

No potential conflicts of interest relevant to this article are declared.